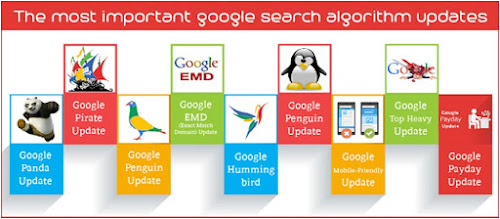

Major Google Algorithm Updates

Google Panda Update

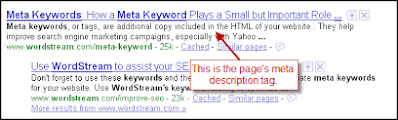

The Google Panda update was a major change to Google's search ranking algorithm aimed at content spam. It was launched on February 23, 2011. Panda improves the quality of search results by demoting low-quality content and thin pages, so it plays a crucial part in how Google ranks web pages. It is designed to catch the common forms of content spamming and, as a result, gives users a better search experience.

Categories of Content Spamming

- Content duplication or Content plagiarism

- Low-quality content, spelling mistakes, grammatical errors

- Content spinning

- Thin pages

- Automatically generated content

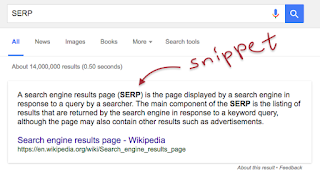
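To make two of these categories concrete, here is a minimal sketch of how a site owner might flag near-duplicate and thin pages before publishing. It is not Google's algorithm, whose details are not public. The function names, the 3-word shingle size, and the 300-word threshold are all assumptions chosen for illustration.

```python
import re

def shingles(text, k=3):
    """Return the set of k-word shingles (word sequences) in the text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < k:
        # Text shorter than k words: treat the whole text as one shingle.
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}

def jaccard_similarity(text_a, text_b, k=3):
    """Jaccard similarity of the two shingle sets, from 0.0 to 1.0."""
    set_a, set_b = shingles(text_a, k), shingles(text_b, k)
    if not set_a and not set_b:
        return 0.0
    return len(set_a & set_b) / len(set_a | set_b)

def is_thin_page(text, min_words=300):
    """Flag a page as thin when it has fewer than min_words words.

    The 300-word default is an illustrative assumption, not a Google rule.
    """
    return len(text.split()) < min_words
```

A similarity close to 1.0 suggests duplicated or lightly spun content. Where to draw the line is a judgment call for each site.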

Panda was refreshed many times after its launch. Panda 4.0 was a major revision, and Google later incorporated Panda into its core search ranking algorithm. As a result of the Panda updates, many thin-page websites dropped out of the SERP (search engine results page), while content-rich websites gained rankings. Webmasters responded by publishing higher-quality content, which improved the overall quality of the SERP.

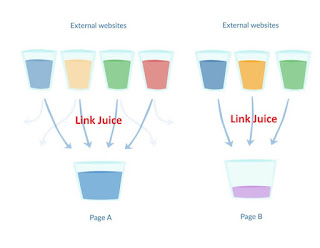

Penguin Update

Following the success of the Panda update, Google introduced another major update, Google Penguin. It launched on April 24, 2012. Penguin targets link spamming in order to improve the quality of the results on the SERP.

Categories of Link Spamming

- Paid links

- Link exchange

- Link farming

- Low-quality directory link submission

- Comment spamming

- Wiki spamming

- Excessive guest blogging done mainly for links

The major revision to Penguin was Penguin 4.0. With this release, Penguin became a real-time part of Google's search algorithm, so link spamming is acted on promptly rather than at the next periodic refresh. This further improved the quality of the results on Google's SERP.

Pigeon Update

The Pigeon update is one of Google's local algorithm updates and affects how local search results are ranked on the SERP. It also affects the results shown in Google Maps. With this update, Google is better able to show local results for searches that call for them, such as a restaurant, salon, or supermarket.

Criteria for doing local SEO

- The business should submit its full details to Google My Business

- The web page should contain the business's full contact information and address

- Add the contact number to local phone directories such as Just dial and Ask dial

- Do social media marketing, preferably localized

Hummingbird Update

The Hummingbird update was launched in 2013 and announced about a month later, on September 26. Unlike Panda and Penguin, Hummingbird is not a penalty-based update. It was a significant change to Google's algorithm meant to give users a better experience on the Google search engine. With this update, Google tried to handle descriptive, sentence-like queries better: it aims to understand the intent behind a search and return the results that best match it.

RankBrain Update

Using artificial intelligence, Google tries to return results by understanding the context of the search. Google confirmed its use of the RankBrain algorithm on October 26, 2015. Search results adapt automatically to factors such as location, time, and situation.

Mobilegeddon Update

This update to the Google search algorithm rolled out on April 21, 2015. With it, Google favors mobile-friendly websites over desktop-style websites in mobile search results, since desktop-style pages are difficult to use on mobile phones. There were two major releases of this update: Mobilegeddon 1 and Mobilegeddon 2.
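One common signal of a mobile-friendly page is a responsive viewport meta tag. The sketch below checks for that single signal using only the Python standard library. It is an illustrative pre-flight check, not Google's mobile-friendliness test, and the names `ViewportFinder` and `has_responsive_viewport` are hypothetical.

```python
from html.parser import HTMLParser

class ViewportFinder(HTMLParser):
    """Records the content of the first <meta name="viewport"> tag found."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        if tag != "meta" or self.viewport is not None:
            return
        attr = dict(attrs)
        if (attr.get("name") or "").lower() == "viewport":
            self.viewport = attr.get("content") or ""

def has_responsive_viewport(html):
    """Return True if the page declares width=device-width in its viewport."""
    finder = ViewportFinder()
    finder.feed(html)
    if finder.viewport is None:
        return False
    # Ignore spacing and letter case inside the content attribute.
    return "width=device-width" in finder.viewport.replace(" ", "").lower()
```

A page that passes this check can still be hard to use on a phone (tiny tap targets, wide fixed-width tables), so treat the result as a first filter only.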

Parked Domain Update

This update aims to eliminate parked domains from the SERP. A parked domain is a registered domain that is not linked to any website, for example a domain booked for future use with nothing planned for the site at present. Google previously showed this type of parked domain on the SERP, but after this update it began to eliminate them.

Exact Match Domain (EMD)

This filter update was released in September 2012. The EMD update focuses on websites whose domain name exactly matches a search keyword but whose content is low quality. If such a website improves the quality and richness of its content, it may regain its rankings.
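As a rough illustration of what "exact match" means here, the sketch below compares a domain's first label with a keyword phrase after stripping spaces, hyphens, and case. The helper name and the normalization rules are assumptions for illustration. How Google actually identifies exact match domains is not public.

```python
import re

def _normalize(text):
    """Lowercase the text and drop everything except letters and digits."""
    return re.sub(r"[^a-z0-9]", "", text.lower())

def is_exact_match_domain(domain, keyword):
    """Return True if the domain's name equals the keyword phrase.

    Only the first label is compared, so "cheap-flights.com" matches
    "cheap flights". A leading "www." is ignored.
    """
    host = domain.lower()
    if host.startswith("www."):
        host = host[4:]
    label = _normalize(host.split(".")[0])
    phrase = _normalize(keyword)
    return bool(phrase) and label == phrase
```

An exact match alone is not the problem. According to the description above, the filter applies when the match is combined with low-quality content.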

Pirate Update

The Google Pirate update, introduced in August 2012, aims to keep sites with pirated content out of the search results. Copyright holders can file a complaint with Google under the Digital Millennium Copyright Act (DMCA), enacted in the US in 1998. Under the Pirate update, or DMCA penalty, Google changes its search results directly to demote or remove websites that violate copyright rules and regulations.